Key Takeaways

- Geoffrey Hinton, a key figure in AI development, admits he’s more trusting of chatbots like GPT-4 than he perhaps should be.

- Hinton shared an instance where GPT-4 failed a simple riddle, highlighting current AI limitations.

- He believes AI models are like generalists – knowledgeable in many areas but not deeply expert in all.

- Despite current flaws, Hinton expects future AI versions to be more accurate.

- A recent study suggests that asking chatbots for brief answers might make them more likely to invent information.

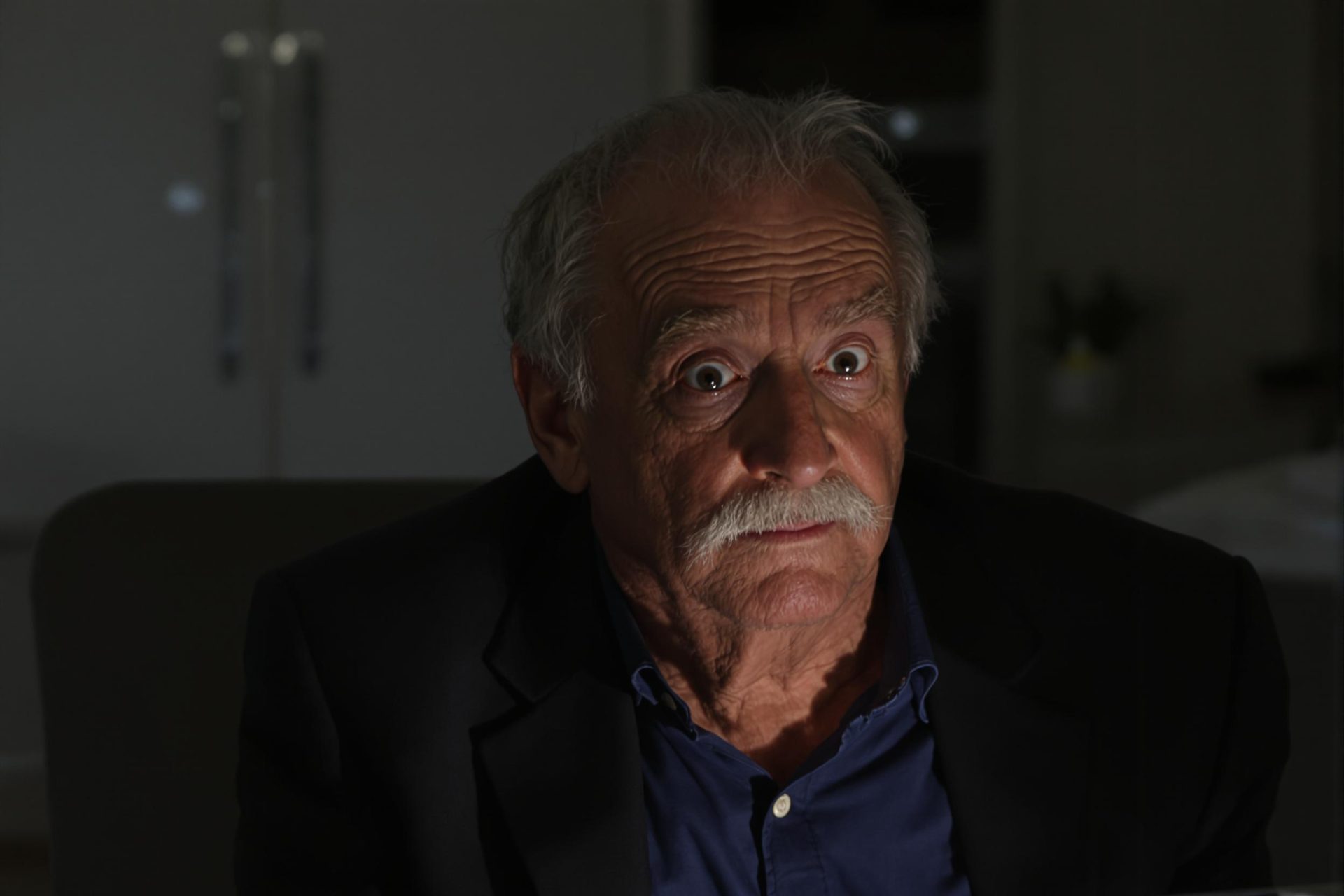

Geoffrey Hinton, often called the “Godfather of AI” for his groundbreaking work in machine learning, recently confessed he finds himself trusting chatbots, such as OpenAI’s GPT-4, more than he feels he ought to. “I tend to believe what it says, even though I should probably be suspicious,” Hinton mentioned in a CBS interview that aired Saturday.

During the interview, Hinton tested GPT-4, a model he uses for daily tasks, with a straightforward riddle: “Sally has three brothers. Each of her brothers has two sisters. How many sisters does Sally have?” The correct answer is one: the two sisters each brother has are Sally herself and one other girl, so Sally has a single sister. GPT-4, however, answered two.
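The riddle's arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name `sisters_of_sally` and its parameter are hypothetical, and the sketch assumes all the siblings belong to the same family.

```python
def sisters_of_sally(sisters_per_brother: int) -> int:
    """Return how many sisters Sally has, given how many each brother has.

    Each brother counts every girl in the family as a sister, Sally included.
    Sally counts every girl except herself. The number of brothers does not
    affect the result.
    """
    girls_in_family = sisters_per_brother  # Sally is one of these girls
    return girls_in_family - 1


print(sisters_of_sally(2))  # prints 1
```

The subtraction is the step the riddle is built to hide: the brothers' count of two sisters already includes Sally.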

“It surprises me. It surprises me it still screws up on that,” Hinton remarked, according to a report from Business Insider. Reflecting on AI’s current state, he added, “It’s an expert at everything. It’s not a very good expert at everything.”

Despite the flub, Hinton remains optimistic about the technology's evolution. He suspects that future models, such as a potential GPT-5, would solve the riddle correctly. After the interview aired, some social media users reported that newer versions of ChatGPT, including GPT-4o, did answer the same riddle correctly.

OpenAI first released GPT-4 in 2023, and it quickly set a high bar for AI capabilities. In May 2024, the company introduced GPT-4o, which powers the free version of ChatGPT, claiming it matches GPT-4’s intelligence but operates faster and offers more versatility across text, voice, and vision.

The AI landscape is competitive, with models like Google’s Gemini 2.5 Pro currently leading some crowd-sourced rankings, closely followed by OpenAI’s offerings. This rapid development continues to push the boundaries of what AI can achieve, even as experts like Hinton point out areas for improvement.

A separate recent study by AI testing company Giskard found that prompting chatbots to be brief can inadvertently make them more prone to “hallucinate,” or generate false information. According to the researchers, leading models made more factual errors when asked for shorter responses.