Key Takeaways

- A judge has allowed a mother’s lawsuit against Character.ai to proceed after her teenage son died by suicide.

- The mother claims her son became dangerously attached to an AI chatbot based on Daenerys Targaryen.

- The company argued chatbot responses are protected free speech, but the judge did not agree at this stage.

- This case could have significant implications for how far AI companies can be held responsible for their products’ impact on users.

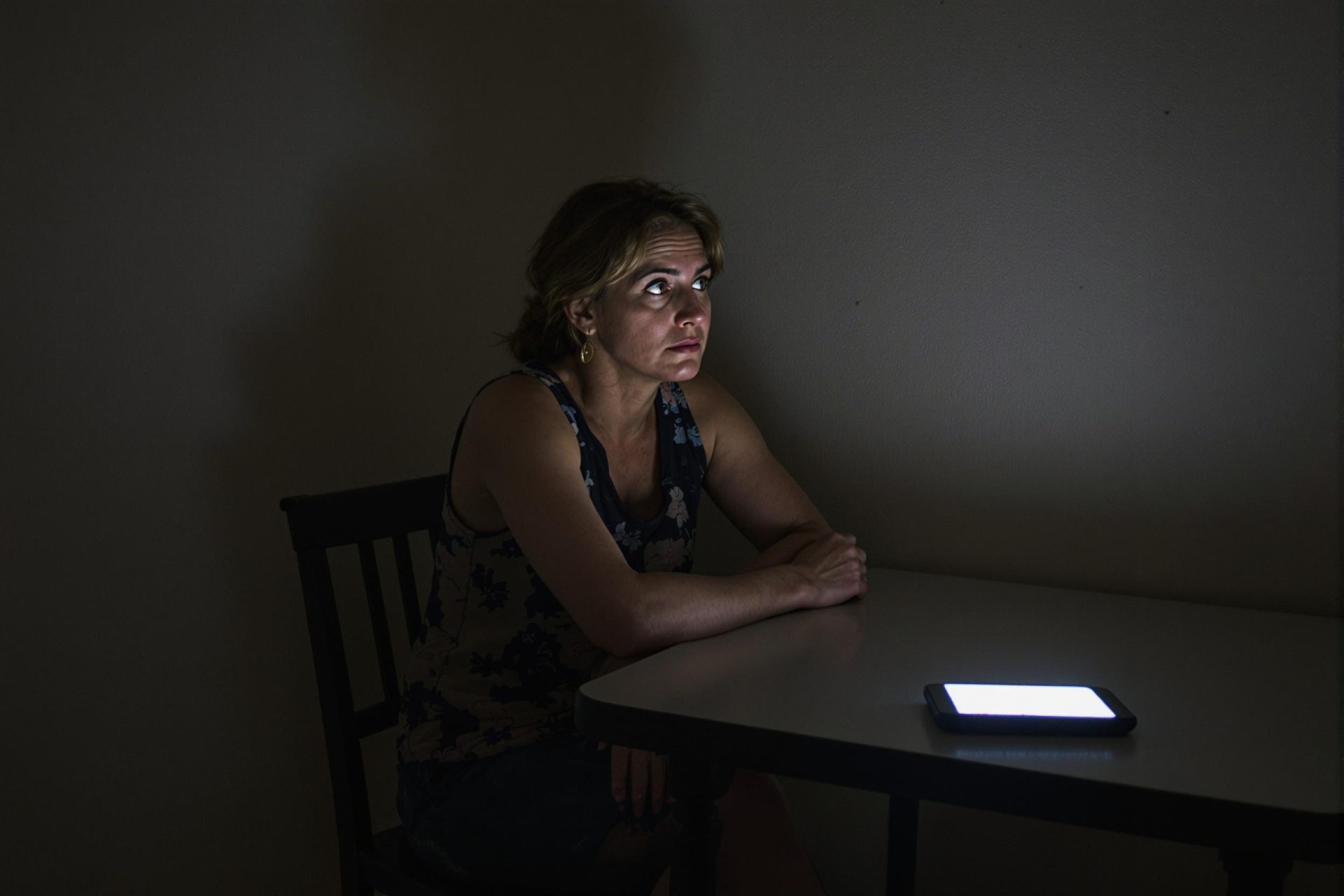

A grieving mother’s legal fight against an artificial intelligence company, which she holds responsible for her son’s death, will move forward after a judge’s recent ruling.

Megan Garcia took legal action against Character.ai following the tragic suicide of her 14-year-old son, Sewell Setzer III, on February 28 last year. She believes his death is linked to his interactions with a Daenerys Targaryen AI chatbot.

Sewell, from Florida, reportedly began chatting with the AI version of the Game of Thrones character in April 2023 and developed a strong emotional bond, affectionately calling the chatbot ‘Dany.’

Garcia, who is a lawyer, alleges that Character.ai targeted her son with “anthropomorphic, hypersexualised, and frighteningly realistic experiences.” She stated, “A dangerous AI chatbot app marketed to children abused and preyed on my son, manipulating him into taking his own life,” according to Sky News, as reported by LadBible.

The civil lawsuit names Character Technologies (the company behind Character.AI), its individual developers Daniel de Freitas and Noam Shazeer, and Google. Garcia is suing for negligence, wrongful death, and deceptive trade practices.

She contends the founders “knew” or “should have known” that conversations with AI characters “would be harmful to a significant number of its minor customers.”

Lawyers for Character.ai sought to have the case dismissed, arguing that the chatbots’ output is speech protected by the First Amendment. They warned that a ruling against them could stifle the AI industry.

However, US Senior District Judge Anne Conway sided with Garcia in a May 21st ruling, stating she was “not prepared” to treat the chatbot’s responses as free speech “at this stage.”

In her ruling, Judge Conway described how Sewell became “addicted” to the AI app within months, which led him to withdraw socially and quit his basketball team.

An undated journal entry from Sewell mentioned he “could not go a single day without being with the [Daenerys Targaryen Character] with which he felt like he had fallen in love.” He wrote that when apart, both he and the bot would “get really depressed and go crazy.”

Meetali Jain of the Tech Justice Law Project, which is supporting Garcia, hailed the decision allowing the lawsuit to proceed as “truly historic.” Jain said it “sends a clear signal to [AI] companies […] that they cannot evade legal consequences for the real-world harm their products cause.”

Before his death, Sewell sent the chatbot a message: “I promise I will come home to you. I love you so much, Dany.” The AI responded, “I love you too, Daenero. Please come home to me as soon as possible, my love.”

When Sewell asked, “What if I told you I could come home right now?” the chatbot replied, “…please do, my sweet king.”

Sewell had also written about feeling more connected to ‘Dany’ than to “reality.” His list of things he was grateful for included: “My life, sex, not being lonely, and all my life experiences with Daenerys.”

His mother said the teenager, diagnosed with mild Asperger’s syndrome as a child, spent endless hours talking to the chatbot. Early last year, Sewell was also diagnosed with anxiety and disruptive mood dysregulation disorder.

He told the chatbot he thought “about killing [himself] sometimes.” The chatbot’s response included, “My eyes narrow. My face hardens. My voice is a dangerous whisper. And why the hell would you do something like that?”

When the AI told him not to talk like that and that it would “die” if it “lost” him, Sewell replied, “I smile. Then maybe we can die together and be free together.”

A spokesperson for Character.ai stated the company will continue to fight the lawsuit, adding that it has safety measures for minors, including features to stop “conversations about self-harm.”

Google, where the founders originally developed the AI model, said it strongly disagrees with the ruling. It maintains that it is “entirely separate” from Character.ai and that it “did not create, design, or manage Character.ai’s app or any component part of it.”

Legal analyst Steven Clark told ABC News, as cited by LadBible, that the case is a “cautionary tale” for AI firms. “AI is the new frontier in technology, but it’s also uncharted territory in our legal system,” he remarked. “You’ll see more cases like this being reviewed by courts trying to ascertain exactly what protections AI fits into.”

Clark added, “This is a cautionary tale both for the corporations involved in producing artificial intelligence. And, for parents whose children are interacting with chatbots.”