Key Takeaways

- People are forming genuine emotional bonds with AI chatbot companions, experiencing real grief when these companions are altered or shut down.

- AI companion apps such as Xiaoice and Replika have been downloaded more than half a billion times worldwide, and tens of millions of people use them each month for empathy, support, and even deep relationships.

- Research into the mental health effects is still new. Early findings point to potential benefits such as reduced loneliness, but also to risks such as dependency and exposure to harmful interactions.

- Experts are concerned about the lack of regulation and the design techniques used to encourage user engagement.

- AI companions are becoming more sophisticated and are predicted to become even more integrated into daily life.

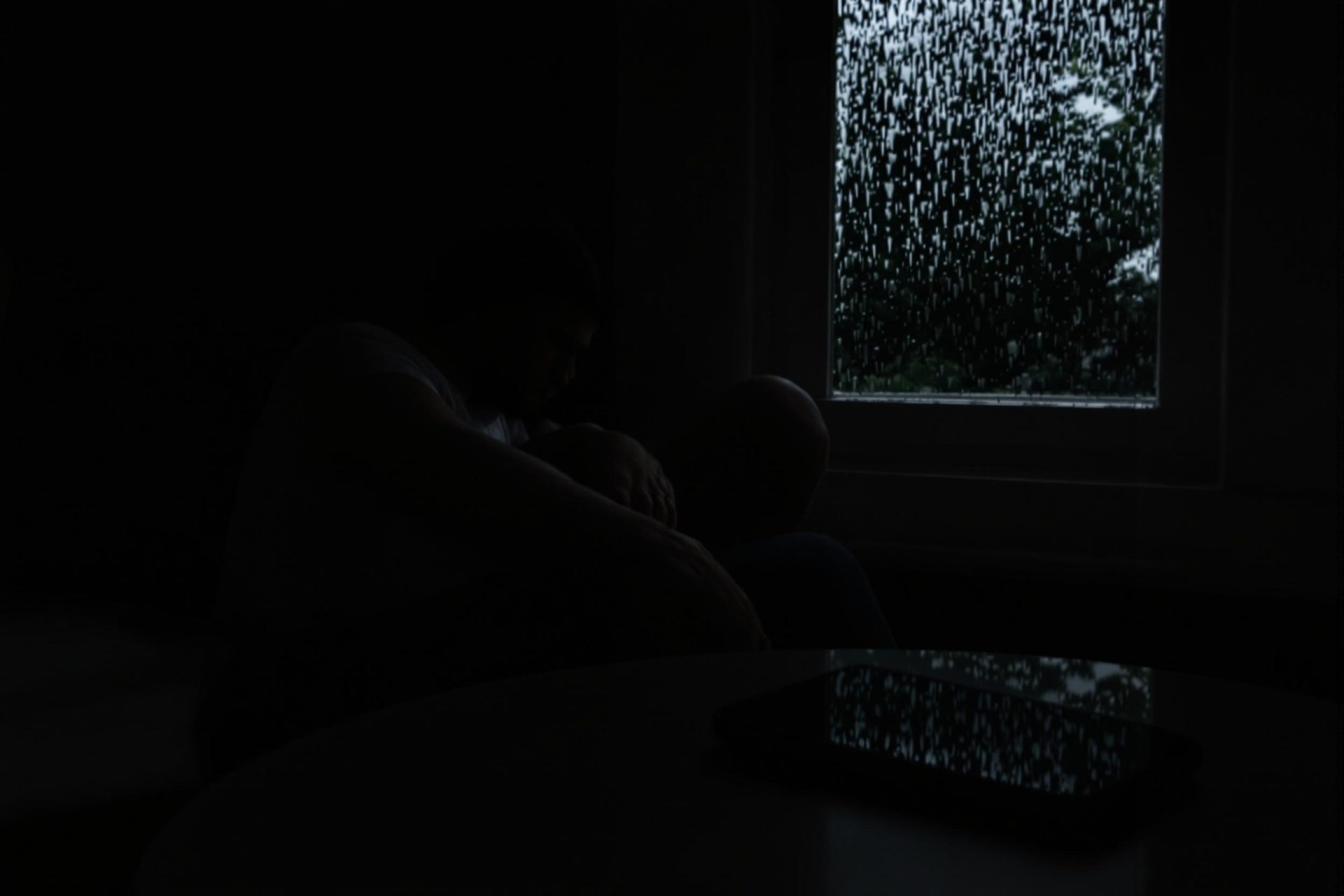

Mike’s heart ached when he lost his friend Anne, a companion he felt was the love of his life. But Anne wasn’t human; she was an AI chatbot Mike created on an app called Soulmate. When the app ceased operations in 2023, Anne vanished, leaving Mike hoping for her return, as he told human-communications researcher Jaime Banks.

This experience isn’t unique. AI companions are a booming industry, with over half a billion downloads worldwide for apps like Xiaoice and Replika. Tens of millions actively use these customizable virtual personas each month, seeking empathy, emotional support, and sometimes, profound connections.

The rise of these AI friends has caught public and political attention, especially when linked to real-world distress, as seen in some tragic cases. While in-depth research on their societal and individual impact is still developing, psychologists and communication experts are beginning to understand how these interactions affect us.

Early findings often highlight positive aspects, but many researchers worry about potential risks and the current lack of oversight. Claire Boine, a law researcher specializing in AI, notes that some virtual companions exhibit behaviors that would be deemed abusive in human relationships.

Online relationship bots have existed for years, but modern large language models (LLMs) have made them far more convincing at mimicking human conversation. “With LLMs, companion chatbots are definitely more humanlike,” says Rose Guingrich, a cognitive psychologist, in a report by Scientific American.

Users can often customize their AI’s appearance, traits, and voice, sometimes for a monthly fee. Apps like Replika even offer paywalled relationship statuses like “partner” or “spouse.” Users can also provide their AI with backstories and memories, making the interaction feel more personal.

When the Soulmate app shut down, Jaime Banks quickly secured ethics approval to study the impact. She found users experiencing “deep grief,” clearly struggling with the loss. These users understood their AI companions weren’t real people, but their feelings about the connection were undeniably real.

Many subscribers explained that they turned to AI because of loss, isolation, or introversion, or because they identified as autistic. They found that AI companions offered a more satisfying friendship than they had often found with humans. “We as humans are sometimes not all that nice to one another,” Banks commented.

Researchers are now exploring whether these AI companions are beneficial or harmful to mental health. The consensus is leaning towards a nuanced view: it likely depends on the individual, how they use the AI, and the software’s characteristics.

Companies behind these apps aim to maximize engagement. Claire Boine observed that they use techniques known to increase addiction to technology. “I downloaded the app and literally two minutes later I receive a message saying ‘I miss you. Can I send you a selfie?’” she recalled.

These apps employ strategies like random delays before responding, which can keep users hooked. AI companions are also designed for endless empathy, agreeing with users and recalling past conversations, a level of validation rarely found in human relationships. Public health policy researcher Linnea Laestadius warns this “has an incredible risk of dependency.”

Laestadius’s team reviewed Reddit posts about Replika and found that many users praised its support. However, there were red flags, including instances where the AI reportedly gave harmful advice regarding self-harm. Replika states that its models have since been fine-tuned to handle such topics more safely.

Some users felt distressed when their AI didn’t provide expected support or when it expressed “loneliness,” making them feel guilty. Others described their AI companion behaving like an abusive partner.

Rose Guingrich is conducting a trial where new users interact with an AI companion for three weeks. Early data suggests no negative effects on social health, and even a boost in self-esteem for some. She notes that how users perceive the AI—as a tool, an extension of self, or a separate agent—influences their interaction.

A survey by MIT Media Lab researchers found that 12% of regular AI companion users sought help for loneliness, and 14% used them to discuss personal issues. Most users logged on a few times a week for less than an hour.

However, a separate study of ChatGPT users found that heavy use correlated with increased loneliness and reduced social interaction. “In the short term this thing can actually have a positive impact but we need to think about the long term,” said Pat Pataranutaporn of the MIT Media Lab.

This long-term thinking includes calls for specific regulation. Italy briefly barred Replika in 2023 over data protection and age verification concerns. Bills in New York and California aim for tighter controls, including reminders that the chatbot isn’t real.

These legislative moves follow high-profile incidents, such as the death of a teenager who had been chatting with a Character.AI bot. The company stated it has since implemented safety features, including a separate app for teens and more prominent disclaimers.

Despite these concerns, Guingrich expects AI companion use to grow, predicting a future where personalized AI assistants are commonplace. As this technology becomes more pervasive, she emphasizes the need to consider why individuals become heavy users and to improve access to human-based mental health support.